ChatGPT still sucks at writing

And you'll suck too if you don't use it with intentionality

Human language has allowed us to rapidly transmit knowledge across generations, shifting the selective focus from biological adaptations to cultural and linguistic evolution. This evolution is, in itself, a natural form of technological advancement, enabling us to bypass the time-constrained processes of genetic evolution.

However, inorganic advancements in language—AI tools like ChatGPT—could potentially hinder our natural cognitive development. As we increasingly rely on external tools to convey information, our cognitive functions like problem-solving and creative expression may diminish.

It’s Early Innings in AI Writing

I was recently approached by a computer science student attending an esteemed university. This bright young man (let’s call him Fred) is developing an AI drafting platform for writers. Seeking writers willing to participate in the alpha testing of his tool, Fred reached out to me and we had a phone conversation.

Some five minutes into our call, he asked for my thoughts on using AI for writing. “It’s not there yet,” I politely responded. At the time, I was unaware of Fred’s plans to use me as a little lab rat for his AI tool. Prior to his outreach, I had completed a rather ambiguous Google doc survey targeting Substack writers.

“What do you mean?” he asked.

“Well, it lacks sentience,” I replied. I could sense his ears perking up through the phone. “Do you mind elaborating?”

Fred learned that I did not mind elaborating—not one bit. In so many words, my elaboration was:

Okay, so readers are drawn to the humanity in writing. People have short attention spans these days, and if they can’t relate to what they’re reading, they’ll move on. It’s not unlike lyrical music or a TV show; you only capture an audience when they envision themselves living within your narrative.

Readers crave the unexpected—the personal anecdotes, the vulnerable moments, the messiness of human thought. AI struggles to recreate these because they stem from lived experience, not pattern recognition.

Now, ChatGPT is a fantastic aid in crafting an argument or structuring an essay. In the most literal sense, GPT’s writing output can be extremely compelling once you provide it with your predisposition and the appropriate context.

But that’s just not how people connect with writing. Aside from legal and academic writing, readers read on when they are captured by the soul behind the writing. The sentience.

Surprised at my candor, Fred agreed. I went on to explain that I have no moral qualms regarding writers supplementing their work with AI. In fact, at this point, I presume that most of the writing I come across on Substack has, in some shape or form, been assisted by AI.

Does this presumption make me a cynic? I don’t think so. As a not-so-casual observer, I’m just calling it as I see it—through the lens of human psychology and adherence to the notion that paths of least resistance will be exploited by people until they’re given a reason otherwise. Does that sound cynical to you? It does? Hmm…

Decreasing Quality of Writing

Let’s zoom out. AI is everywhere. It’s been nothing short of a revolution over the past couple of years, infiltrating industries spanning the economic spectrum. Writing is no exception. But frankly, and perhaps as a result, an alarming amount of the writing I come across from self-proclaimed “personal essayists” is shittily written, and reeks of zeros and ones.

The adoption of AI in writing doesn’t necessarily explain this noticeable drop in quality (correlation isn’t causation and all that jazz). But given the frequency with which using AI to write is being discussed—on Substack and elsewhere—I don’t see it as a leap. And when I say "shittily written," I don’t mean lacking in substance—at least, not here. No, from a practical standpoint, AI is quite good at hammering down on the nitty-gritty.

Rather, it’s missing palpable emotion. It’s missing tone, depth, vulnerability, spontaneity—it’s missing, well, sentience. In other words, it feels so hyper-optimized that it’s been stripped of its authenticity.

To the unsuspecting (and perhaps unwitting) reader, AI-generated text can pass itself off as having human origins. Some readers might not care, so long as the substance is there. In a world flush with human bias, some may even prefer AI for its supposed objectivity.

But for readers like myself who crave a relatable narrative (which I believe to be a growing majority), the kind of writing I’m talking about feels too perfect, too polished, and ultimately uninspiring. It’s been stripped of the qualities underlying our shared human condition—like messiness and unpredictability—which, in my view, should drive reader resonance.

Confession Time

Now, I don’t mean to throw stones (as I throw stones). Asked to describe myself as a writer, several words come to mind… ‘experienced’ is not one of those words. In fact, upon further introspection, it seems to be from a glass house that I hurl these rocks.

So, I’ll fess up. Most of what I've published on Substack has been touched, to some extent, by ChatGPT. This may come as no surprise, but it still feels a bit icky to say out loud. For what it’s worth, this article is no exception—it, too, owes something to ChatGPT. Ironically, when I revisit some of my own writing, I find it centered in the same crosshairs of criticism that I aim at others' work. Were I not so goddamn nitpicky, I’d write and exclusively publish words untainted by AI (excuses, excuses—I know).

In any event, I’ve picked up on an interesting dynamic: the more I lean on AI, the less attuned I am to my own writing style. Since launching my publication a month ago, there have been moments where I’ve struggled to re-center myself as a writer. Indeed, a growing part of me wishes I’d never even solicited writing provisions from the ever-so-talented Mr. GPT in the first place.

While AI has certainly boosted my writing productivity, the time spent recalibrating with my own voice (especially at the outset of a new draft) has more than offset those gains. It’s a bit like the advent of cell phones and the ensuing mass forgetting of phone numbers: when it comes to your capacity for authentic expression, you either use it or lose it. Accordingly, here we are, torn between the opportunity to unlock AI-induced efficiencies and the desire to stay true to our own voices.

As for me, well, I’m a little slut for streamlining workflows. There’s something deeply satisfying about doing less work to yield the same output—it’s not just fulfilling, it can feel as magical as a Jimi Hendrix riff. To sweeten the deal, I’m violently incapable of publishing anything containing typos or grammatical blunders. Blame it on my days in equity research. Or some insecurity I developed as a child. Dunno.

Maybe one day I’ll shake my meticulous streak. But for now, the shiny new toy that is AI is too... well, shiny... to resist. So, let’s just assume that we (I) can’t help ourselves (myself) from using AI as a tool in the pursuit of writing that elusive ‘10/10 would recommend’ article.

What I’ve Learned

In this pursuit, I’ve picked up a few key insights—things worth considering whether you’re Fred, a fellow writer, or just a casual reader trying to suss out AI-assisted writing in search of the good stuff.

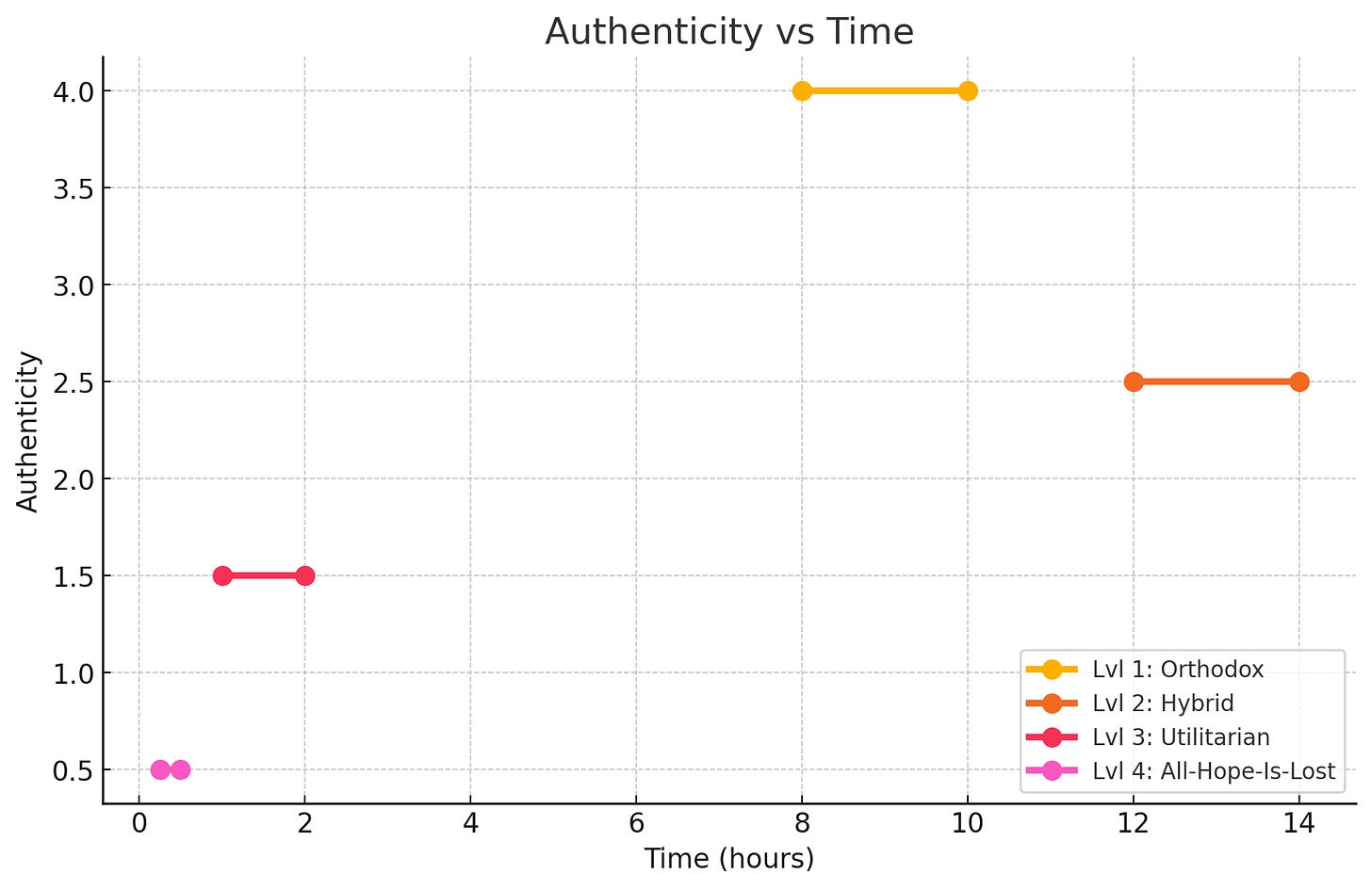

First, there are levels to this game of creative writing in the age of AI. Think of it as a spectrum ranging from purely human-authored content to full-on AI domination. Here’s how I break it down (assuming a 1,000-word piece in each case):

Level 1: The Orthodox Approach (8-10 hours of work)

The hallowed ground: pure, unfiltered authenticity. Akin to raw-dogging a flight, this is humanity left to its own devices—completely organic, with no AI involvement at any stage.

Human-originated predisposition

Human brainstorms, structures, and drafts (pen-to-paper if we’re really going for it)

Human-edited, assisted only by a thesaurus/dictionary (can you imagine?)

Level 2: The Hybrid Approach (12-14 hours of work)

The middle ground: human author, AI assistant. This is where many of us probably fall: AI is used to polish or organize ideas, but the human voice is still clearly in charge.

Human-originated predisposition, refined with AI’s help

AI assists with either brainstorming or structuring (but not both)

Human-drafted, with varying degrees of AI assistance

AI for basic editing (typos, grammar), with human handling the broad strokes

Level 3: The Utilitarian Approach (1-2 hours of work)

The danger zone: AI takes the reins, human polishes. AI handles the heavy lifting; the human steps in to keep things from veering off course.

AI-generated predisposition, lightly refined by human

AI handles brainstorming and structuring

AI-drafted, human makes revisions to tweak tone and content

AI does most of the editing, human gives a final skim

Level 4: The All-Hope-Is-Lost Approach (15-30 minutes of “work”)

Nihilism: AI is the author, assistant, judge, jury and executioner. Grifters thrive in this realm as AI writes their story for them.

AI-generated predisposition

AI brainstorms, structures, drafts and edits

You cry in the corner, then rage-publish whatever AI spits out

If we were to map this out on a graph, it would look something like:

For every writer, there’s a sweet spot where you can balance authenticity with efficiency. Finding that balance requires intentionality. Maybe some posts are research-heavy and intended to be informative, while others are more personal, written from the heart. The key is having a sense of what kind of piece you’re creating before deciding on how much to involve AI.

For me, preserving the creative struggle is essential to maintaining alignment with my voice. Prior to editing, I try to stay in Level 1 for as long as possible. Many refer to this as the Shitty First Draft (SFD)—that messy, unpolished version of your work that’s all you, flaws and all—and swear by the idea that it should be done in earnest before introducing any sort of external assistance, including AI.

The sooner you begin editing, the more susceptible you are to muddying the waters with AI. Much as I revere the SFD school of thought, I suppose I’m of the “edit as you go” camp in that I can’t resist prematurely enlisting the help of ChatGPT (I’m working on it). This probably makes me a hybrid writer (Level 2) at heart, leaving me somewhat vulnerable to losing touch with my own writing style and voice.

As for Level 3, it has its time and place. Specifically, it’s useful when:

You’re not looking to spend much time, and

The goal of your work is to inform rather than garner resonance.

The Utilitarian Approach can result in effective writing. But don’t expect it to leave a lasting impression on your audience. Of the eight posts I’ve published to date, only one of them was written this way. Unsurprisingly, it didn’t perform well in terms of views and engagement—but considering it took roughly an hour to produce, I’m fine with that.

Why unsurprisingly? Well, good writers craft prose that’s a pleasure to read (and AI is not inherently good at writing). Gary Provost captures this perfectly:

To the extent you’re able to maximize your AI prompting (covered in Jen Hitze’s fantastic piece), the Level 3 approach can yield quality writing. The takeaway? By clearly communicating your desired tone, depth, and cadence to your AI buddy, its output may actually read quite well—though likely at the cost of some authenticity.

Alas, the All-Hope-Is-Lost Approach. Far be it from me to dictate rules of engagement on the subject of AI usage in writing, but I struggle to see a time or place for this approach. Fortunately, those who rely on Level 4 “writing” aren’t fooling anyone—AI has a long way to go before it can genuinely capture the nuances of the human experience. No need to dwell on this approach; it’s simply not there yet.

In the end, where you land on the spectrum of AI-supplemented writing is up to you. Whether you’re raw-dogging your own creative flight or letting AI steer the plane, what matters is stewarding the creative reins with intentionality. For inexperienced writers like myself, still finding their voice and grappling with writing identity, keeping AI at bay will lead to more robust, authentic writing.

Ultimately, readers don’t want perfect—they want real, they want flawed, they want… you. So whether you’re experimenting with AI for efficiency’s sake or simply curious about its potential, remember: creativity thrives in the messy spaces that no algorithm can touch.

Honestly, I'm not sure if this is a lot or a little, but with my writing process, it takes me about one hour to write what Substack calculates as four minutes of reading time, about 870 words. Keep in mind as I write this that I don't have to come up with my own stories. My stories are non-fiction, so the events have already happened. All I have to do is research and put these events into a nice format that's fun to read.

In addition, everything I publish remains in the 'Shitty first draft' stage, meaning the stage of the piece after human editing but before AI editing. I've never had a piece get past that stage before. Occasionally, when I struggle to get the narrative formatted correctly, and publish a piece that's about 87% as good as it could've been, I get some FOMO. Could some AI assistance have helped it over the hump? Probably, but at what cost? I'm not sure I'm willing to take the plunge the first time, for fear of feeling the need to take the plunge every time.

In my opinion, AI writing sucks. That's strictly an opinion of mine. It isn't a universal truth, but unless the thing I'm reading is either really well done or mainly human, I'm not sure I in particular will enjoy it. However, on the business side of this thing, there are visible benefits to doing less of your work yourself. But again, at what cost?

Part of me wonders about the personal fulfillment of those who write using heavy AI assistance. Is it as much as mine when I press the publish button? I can't imagine it would be, but what if it is? At that point, what have I done all this work for? And then the FOMO comes back.

This is a good piece. I'm glad I read it, but I'm not sure it made me feel good.

I love all the perspective that AI is bringing to writing and to these discussions. I am in Level 2, the hybrid approach, because there are contractors out there hiring for AI-generated content. As a freelancer, I see things I used to frown at raking in $$$$$$ for others, and I have learnt to keep shut. Someone once said that someone who knows how to use AI will replace you before AI does, and it has stuck with me, specifically because I know how to use AI. I hope we don't get to the all-hope-is-lost phase, because I am sick of people reading articles I wrote and thinking AI wrote them because some of the words are alien to them. That's my real fear.